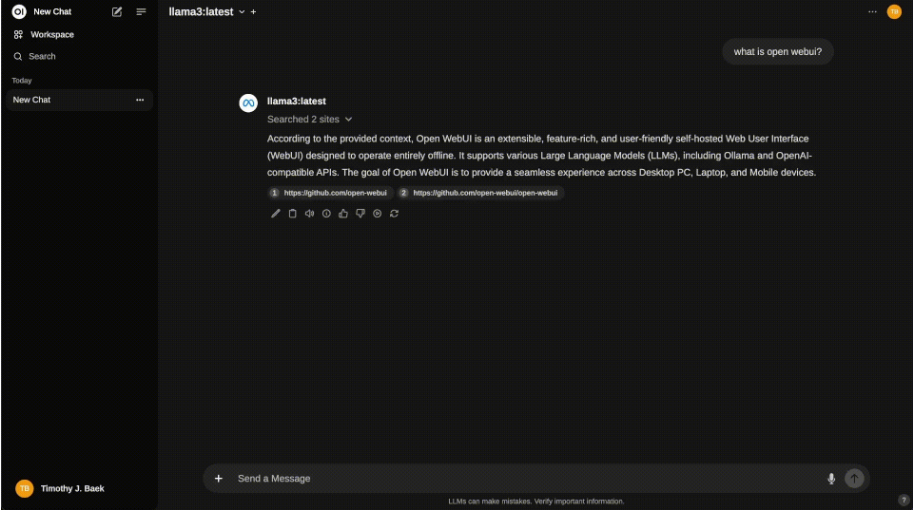

Open WebUI

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted AI platform designed to operate entirely offline. It supports various LLM runners such as Ollama and OpenAI-compatible APIs, and includes a built-in inference engine for RAG, making it a powerful AI deployment solution.

Prepare

Before following this document to use Open WebUI, make sure the following points are met:

- Log in to the Websoft9 Console and find or install Open WebUI:

  - Go to My Apps to list installed applications

  - Go to App Store to install the target application

- This application is installed through the Websoft9 Console.

- Your use of this application complies with the MIT open source license agreement.

- Configure a domain name, or open the required external network ports in the server security group, so that the application can be accessed.
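To confirm that the security group actually allows traffic to the application, a quick TCP check from your own machine can help. The following is a minimal sketch, not part of Websoft9; the function name is hypothetical, and the host and port you pass in must come from the application's Access information.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and attempts a TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures
        return False
```

For example, calling is_port_open with your server address and the application port returns False when the port is still blocked by the security group or the application is not running.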

Getting started

Initial setup

- After installing Open WebUI from the Websoft9 Console, get the application's Overview and Access information from My Apps

- Follow the wizard to create an administrator account

- In the upper left corner, open the model selector, search for tinydolphin, and click it to download the model

- Select the tinydolphin model to start using the chat

Running with GPU?

- Select the cuda version when creating this application

- Select Compose > Go to Edit Repository and change docker-compose-gpu.yml to docker-compose.yml

- Recreate this application
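Before switching to the cuda version, it can be worth checking that the host sees an NVIDIA GPU at all. The sketch below assumes the NVIDIA driver tools (nvidia-smi) are installed on the server; the function name is hypothetical. It only checks the host: GPU access from inside Docker containers typically also requires the NVIDIA Container Toolkit.

```python
import shutil
import subprocess


def nvidia_gpu_names(smi_path: str = "nvidia-smi") -> list[str]:
    """Return GPU names reported by nvidia-smi, or [] if it is unavailable."""
    exe = shutil.which(smi_path)
    if exe is None:
        return []  # driver tools not installed or not on PATH
    try:
        result = subprocess.run(
            [exe, "--query-gpu=name", "--format=csv,noheader"],
            capture_output=True, text=True, timeout=10, check=True,
        )
    except (subprocess.SubprocessError, OSError):
        return []  # nvidia-smi failed, e.g. no driver loaded
    # One GPU name per output line
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]
```

An empty list suggests the GPU steps above will not take effect until the driver is installed on the host.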

Configuration options

- Multilingual (√)

- Configure the Ollama URL: Settings > Admin Settings
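Before entering an Ollama URL in Admin Settings, you may want to verify that it responds. The sketch below queries Ollama's /api/tags endpoint, which lists the models available on the server. The function names are hypothetical, and the base URL is an assumption: http://localhost:11434 is Ollama's default, but your deployment may use a different host or port.

```python
import json
import urllib.request


def tags_url(base_url: str) -> str:
    """Build the Ollama model-list endpoint from a base URL."""
    return base_url.rstrip("/") + "/api/tags"


def list_ollama_models(base_url: str, opener=urllib.request.urlopen) -> list[str]:
    """Return the names of models the Ollama server at base_url reports."""
    # opener is injectable so the function can be exercised without a server
    with opener(tags_url(base_url), timeout=5) as resp:
        payload = json.load(resp)
    return [m["name"] for m in payload.get("models", [])]
```

If the URL is correct and tinydolphin has been downloaded, the returned list should include an entry for it; a connection error indicates that the URL or port is wrong or not reachable from where the script runs.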